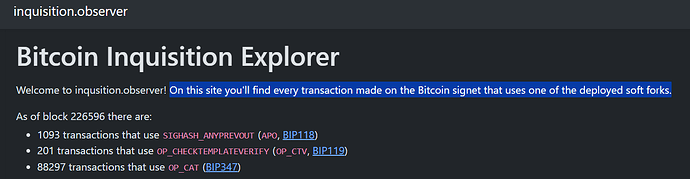

BIP 118 (SIGHASH_ANYPREVOUT, APO) and BIP 119 (OP_CHECKTEMPLATEVERIFY, CTV) have been enabled on signet for about two years now, since late 2022. More recently, BIP 347 (OP_CAT) was also activated, and has been available for about six months.

Here’s a brief investigation into how they’ve been used:

APO

- Most of the transactions were spends of the coinbase payout, and can be observed as spends of address tb1pwzv7fv35yl7ypwj8w7al2t8apd6yf4568cs772qjwper74xqc99sk8x7tk. All those spends reuse the same APOAS (SIGHASH_ANYPREVOUTANYSCRIPT) signature, spending multiple block rewards back to faucet addresses, generally with very large amounts lost to fees.

- There are a handful of spends with the script `1 OP_CHECKSIG`, ie signed by the internal public key.

- There are substantially more spends using APO as an overridable CTV, mostly in the second quarter of 2023, with a script of the form `<sig> 1 OP_CHECKSIG`. Spending via the script path means that you have to satisfy the fixed signature, so you are limited in how your tx is structured; however, since the IPK was used to generate that signature, it could equally be used to generate a key path signature with no restrictions. These spends stop around the time that bips PR#1472 was filed.

- Sat, 04 Mar 2023 18:41:03 +0000

- Sun, 05 Mar 2023 15:44:51 +0000

- Thu, 09 Mar 2023 00:14:56 +0000

- Thu, 09 Mar 2023 00:36:27 +0000

- Wed, 05 Apr 2023 13:32:50 +0000

- Wed, 05 Apr 2023 19:54:51 +0000

- Thu, 06 Apr 2023 00:07:20 +0000

- Mon, 10 Apr 2023 01:23:03 +0000

- Sat, 22 Apr 2023 22:09:05 +0000

- Sat, 22 Apr 2023 22:58:28 +0000

- Sat, 22 Apr 2023 23:14:46 +0000

- Sun, 23 Apr 2023 00:57:13 +0000

- Sun, 23 Apr 2023 07:04:50 +0000

- Sun, 23 Apr 2023 07:37:48 +0000

- Sun, 23 Apr 2023 11:58:23 +0000

- Sun, 23 Apr 2023 12:37:50 +0000

- Sun, 23 Apr 2023 13:29:34 +0000

- Sun, 23 Apr 2023 15:28:57 +0000

- Sun, 23 Apr 2023 15:55:32 +0000

- Sun, 23 Apr 2023 19:46:44 +0000

- Sun, 23 Apr 2023 20:20:17 +0000

- Sun, 23 Apr 2023 23:39:36 +0000

- Mon, 24 Apr 2023 11:51:02 +0000

- Mon, 24 Apr 2023 12:27:22 +0000

- Mon, 24 Apr 2023 20:11:49 +0000

- Mon, 24 Apr 2023 20:23:54 +0000

- Mon, 24 Apr 2023 20:34:18 +0000

- Mon, 24 Apr 2023 21:01:51 +0000

- Mon, 24 Apr 2023 21:12:31 +0000

- Tue, 25 Apr 2023 03:58:02 +0000

- Tue, 25 Apr 2023 04:16:09 +0000

- Wed, 26 Apr 2023 13:53:41 +0000

- Thu, 27 Apr 2023 14:47:01 +0000

- Thu, 27 Apr 2023 15:50:18 +0000

- Fri, 28 Apr 2023 05:49:20 +0000

- Fri, 28 Apr 2023 17:13:04 +0000

- Sat, 29 Apr 2023 21:49:12 +0000

- Fri, 05 May 2023 03:39:18 +0000

- Fri, 12 May 2023 17:31:59 +0000

- Fri, 12 May 2023 19:45:54 +0000

- Wed, 17 May 2023 23:39:08 +0000

- Thu, 18 May 2023 00:08:57 +0000

- Tue, 30 May 2023 22:09:58 +0000

- Wed, 31 May 2023 19:30:50 +0000

- Wed, 31 May 2023 19:37:34 +0000

- Wed, 31 May 2023 19:53:48 +0000

- Tue, 06 Jun 2023 23:40:17 +0000

- Wed, 07 Jun 2023 00:23:01 +0000

- Wed, 07 Jun 2023 17:55:42 +0000

- A couple of tests of ln-symmetry were conducted, which use two APO-based script templates. One is a simple APO-based spend using the IPK, ie `1 CHECKSIG`, that also includes an annex commitment providing enough information to reconstruct the following tx's script. The other is a CTV-like construction, `<sig> <01;G> CHECKSIG`, where the key is the curve generator G with the 0x01 APO prefix, so the signature can be produced by anyone using the private key 1. This is necessary because the IPK is a musig point, and isn't available for signature generation at the time the tx is constructed.
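Both key encodings used above (`1` for the internal key, and `<01;G>`) rely on the public-key types that BIP 118 adds to tapscript signature checking. A rough sketch of that dispatch follows, assuming the reading of BIP 118 and BIP 342 below is accurate. The enum and function names are hypothetical, signature verification itself is omitted, and the BIP text is authoritative.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

using Bytes = std::vector<uint8_t>;

// Public-key types seen by tapscript signature opcodes with BIP 118 active
// (hypothetical names; only the key dispatch is modelled, not the signature check).
enum class KeyType {
    Invalid,         // empty key: the script fails immediately
    InternalKeyApo,  // the single byte 0x01 (what `1` pushes): taproot internal key, APO hash types allowed
    ApoKey,          // 0x01 followed by a 32-byte x-only key: APO hash types allowed
    Bip340Key,       // plain 32-byte x-only key: ordinary BIP 342 rules, no APO hash types
    Unknown          // any other encoding: a non-empty signature counts as valid (upgrade hook)
};

KeyType ClassifyTapscriptKey(const Bytes& pubkey) {
    if (pubkey.empty()) return KeyType::Invalid;
    if (pubkey.size() == 1 && pubkey[0] == 0x01) return KeyType::InternalKeyApo;
    if (pubkey.size() == 33 && pubkey[0] == 0x01) return KeyType::ApoKey;
    if (pubkey.size() == 32) return KeyType::Bip340Key;
    return KeyType::Unknown;
}

// Builds the <01;G> key: the 0x01 APO prefix followed by the x-only encoding
// of the secp256k1 generator G, whose private key is simply 1.
Bytes GeneratorApoKey() {
    static const uint8_t kGx[32] = {
        0x79, 0xBE, 0x66, 0x7E, 0xF9, 0xDC, 0xBB, 0xAC, 0x55, 0xA0, 0x62, 0x95, 0xCE, 0x87, 0x0B, 0x07,
        0x02, 0x9B, 0xFC, 0xDB, 0x2D, 0xCE, 0x28, 0xD9, 0x59, 0xF2, 0x81, 0x5B, 0x16, 0xF8, 0x17, 0x98};
    Bytes key{0x01};
    key.insert(key.end(), kGx, kGx + 32);
    return key;
}
```

For example, `ClassifyTapscriptKey(GeneratorApoKey())` yields `KeyType::ApoKey`, while the single byte pushed by `1` yields `KeyType::InternalKeyApo`.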

That’s the extent of APO-based usage on signet to date, to the best of my knowledge. Further ln-symmetry tests may become possible soon, given all the progress in tx relay that’s been made recently.

CTV

- The first pair of CTV txs follow a “committed set of outputs immediately, or anything after a timeout” pattern, also requiring a signature for the specific path chosen: `IF <height> CLTV DROP <key> CHECKSIG ELSE <hash> CTV DROP <key> CHECKSIG ENDIF`. These scripts are similar to the simple-ctv-vault unvault script, though that uses CSV rather than CLTV and does not require a signature on the CTV path.

- This was followed up by another pair that restricted the CTV path further, requiring a preimage reveal in addition to a signature: `IF <height> CLTV DROP <key> CHECKSIG ELSE SHA256 <hash> EQUALVERIFY <hash> CTV DROP CHECKSIG ENDIF`.

- There are a couple of examples of bare CTV, ie the scriptPubKey was `<hash> CTV`, with no scriptSig or witness required when spending.

- Thu, 25 Jan 2024 14:14:50 +0000

- Fri, 26 Jan 2024 18:19:27 +0000 - “hello world”

- There are a handful of p2wsh spends where the witness script was a simple `<hash> CTV`. These are pretty generic, so could be anything.

- Also p2wsh `<hash> CTV`, but with important messages in OP_RETURN outputs.
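The spending conditions of the CTV scripts above can be modelled as plain boolean logic. The following is a toy sketch, not a script interpreter. The BIP 119 template hash, the SHA256 preimage check and the signature check are assumed to have been evaluated elsewhere and reduced to booleans, and the `Spend` struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical summary of one spending attempt; expensive checks are precomputed.
struct Spend {
    bool timeout_branch;    // witness selects the IF (timeout) branch
    uint32_t tx_locktime;   // nLockTime of the spending tx
    bool input_final;       // input nSequence is 0xffffffff, which makes CLTV fail
    bool template_matches;  // the spending tx's BIP 119 template hash equals <hash>
    bool preimage_matches;  // the revealed preimage hashes to the committed value
    bool sig_valid;         // the signature is valid for the relevant key
};

// nLockTime values below this are block heights; at or above it, timestamps.
constexpr uint32_t LOCKTIME_THRESHOLD = 500000000;

// OP_CHECKLOCKTIMEVERIFY for a height-based lock. Time-based locks are not
// modelled and simply return false here.
bool CltvHeightOk(const Spend& s, uint32_t height) {
    if (height >= LOCKTIME_THRESHOLD || s.tx_locktime >= LOCKTIME_THRESHOLD) return false;
    if (s.input_final) return false;
    return s.tx_locktime >= height;
}

// IF <height> CLTV DROP <key> CHECKSIG ELSE <hash> CTV DROP <key> CHECKSIG ENDIF
bool TimeoutOrTemplate(const Spend& s, uint32_t height) {
    if (s.timeout_branch) return CltvHeightOk(s, height) && s.sig_valid;
    return s.template_matches && s.sig_valid;
}

// The second pair: the CTV branch additionally needs a SHA256 preimage reveal.
bool TimeoutOrTemplateWithPreimage(const Spend& s, uint32_t height) {
    if (s.timeout_branch) return CltvHeightOk(s, height) && s.sig_valid;
    return s.preimage_matches && s.template_matches && s.sig_valid;
}

// Bare `<hash> CTV`: nothing is provided when spending; only the template matters.
bool BareCtv(const Spend& s) { return s.template_matches; }
```

Nothing in the CTV branch depends on `<height>`, which is what lets the committed outputs be used immediately, while the IF branch only opens once the spending tx can set nLockTime to at least `<height>`.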

And that’s it. I didn’t see any indication of exploration of kanzure’s CTV-vaults, which uses OP_ROLL with OP_CTV, or of the simple-ctv-spacechain, which uses bare CTV and creates a chain of CTVs ending in an OP_RETURN.

CAT

There are substantially more transactions on chain that use CAT (74k) than use either APO (1k) or CTV (16), due to the PoW faucet, which adds three spends per block; that makes it a bit harder to analyse. Linking to addresses rather than txids, I think they sum up as:

- PoW faucet:

- STARK verification:

- tb1pnpxhs2syr62zkxgry0xv44zn84jg9dwg8jhp4gjefv9gh3ysmmssjxlyqy (Google points to this article: Recent Progress on Bitcoin STARK Verifier)

- tb1pn7zprl7ufprqht03k7erk2vtp78rqdj9vw4xs95qcee7v7ge0uks2f3u48 (Google links to a tweet instead)

- tb1p2jczsavv377s46epv9ry6uydy67fqew0ghdhxtz2xp5f56ghj5wqlexrvn (Google gives an earlier tweet)

- Looks to be CAT/CHECKSIG-based introspection, perhaps for vault or covenant behaviour?

- tb1p499mmrdadtyh47rw2220t9f2mteyszd352f22ajtx3mp5sdwqj3sqwdm0n

- tb1pccmgyhanq2fpr2ru4pnm9xyknlmel9h6a8dlgaaz6gq5w4vw096qmj6xtw

- tb1pcjzr4fq7gzcxmtnfdg8c293ej2mhqf2s0svj9eltjx3ze0f4v9pq00x3y4

- tb1pn8ekwyu4gw9lqa373e9tmq6xm9pn7gf7pgyr6v75rty5j7frz5ysjde8sn

- tb1pdz3pakln88567wetz3tjjc3vgghhxqwdlaya2xwweuspt63eveusgm9rg9

- tb1p2mrsstfsz6vc8kraaf3nn0wdg3lmj60e6wq7sacfj07a0uxkpuqqwz2czy

- tb1pzlhwsacu5ucf04wnws27elj2pyt0nthcv9c2njwu35jl6k0cxp9ssyuje3

- tb1pjhwjqw9gvtv0jvne8x7y85vzrp0yueu2xsfkyak222l5k564lg7qrqf0re

- tb1ppfdtz8eufft30gswdzfa39lq0adc6dg937n09krrwxa3cnlclfjqhlmsaa

- tb1pt3u4mgyg3xze6js2unn298tase5xmj5n45e9gexfmm4mecjq98lq90h2jt

- tb1prcx703vp59q7sa7y0759a99vl7fjfmhuarnqww45u04s9vpmx8dsxm44jl

- tb1pzz7xpljawrl6tg0h0w0shn98vfga5v4txn9qwy85a6rvvpx7datq7l4sp7

- tb1p8gqmhv6scsnxy40yxqxp8zjxshhm2mc5sgtkmyxae3yrsjkxd24qqjxf25

- tb1pqkuq43vm5qe8naptpehe6qph7kh3mgtl4wr9mnk46pet0gk3sl4qjltsxl
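The introspection scripts above presumably assemble data on the stack with OP_CAT. For reference, here is a sketch of the opcode's stack effect as BIP 347 specifies it: pop two elements, push their concatenation, and fail if fewer than two elements are available or the result would exceed 520 bytes. The type aliases and function name are illustrative, not Bitcoin Core's interpreter code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Element = std::vector<uint8_t>;
using Stack = std::vector<Element>;  // back() is the top of the stack

// No stack element may exceed 520 bytes.
constexpr std::size_t MAX_SCRIPT_ELEMENT_SIZE = 520;

// OP_CAT: [... x1 x2] -> [... x1||x2], where x2 was on top.
// Returns false in the cases where script execution would fail.
bool OpCat(Stack& stack) {
    if (stack.size() < 2) return false;
    Element& x1 = stack[stack.size() - 2];
    const Element& x2 = stack.back();
    if (x1.size() + x2.size() > MAX_SCRIPT_ELEMENT_SIZE) return false;
    x1.insert(x1.end(), x2.begin(), x2.end());  // append top element to the one below it
    stack.pop_back();                           // remove the (now consumed) top element
    return true;
}
```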

Addendum

I tracked these by hacking up a signet node that tries to validate block transactions with all of CTV/APO/CAT discouraged, and on failure retries with CTV and APO individually enabled, then with everything enabled, logging a message hinting at which features each tx uses. It could be interesting to turn that into an index that tracks txs using particular features a bit more reliably.

patch

```diff
--- a/src/validation.cpp
+++ b/src/validation.cpp
@@ -2663,13 +2663,22 @@ bool Chainstate::ConnectBlock(const CBlock& block, BlockValidationState& state,
             std::vector<CScriptCheck> vChecks;
             bool fCacheResults = fJustCheck; /* Don't cache results if we're actually connecting blocks (still consult the cache, though) */
             TxValidationState tx_state;
-            if (fScriptChecks && !CheckInputScripts(tx, tx_state, view, flags, fCacheResults, fCacheResults, txsdata[i], m_chainman.m_validation_cache, parallel_script_checks ? &vChecks : nullptr)) {
-                // Any transaction validation failure in ConnectBlock is a block consensus failure
-                state.Invalid(BlockValidationResult::BLOCK_CONSENSUS,
-                              tx_state.GetRejectReason(), tx_state.GetDebugMessage());
-                LogError("ConnectBlock(): CheckInputScripts on %s failed with %s\n",
-                         tx.GetHash().ToString(), state.ToString());
-                return false;
+            auto xflags = flags | SCRIPT_VERIFY_DISCOURAGE_CHECK_TEMPLATE_VERIFY_HASH | SCRIPT_VERIFY_DISCOURAGE_ANYPREVOUT | SCRIPT_VERIFY_DISCOURAGE_OP_CAT;
+            if (fScriptChecks && !CheckInputScripts(tx, tx_state, view, xflags, false, false, txsdata[i], m_chainman.m_validation_cache, nullptr)) {
+                if (CheckInputScripts(tx, tx_state, view, (xflags ^ SCRIPT_VERIFY_DISCOURAGE_CHECK_TEMPLATE_VERIFY_HASH), false, false, txsdata[i], m_chainman.m_validation_cache, nullptr)) {
+                    LogInfo("CTV using transaction %s\n", tx.GetHash().ToString());
+                } else if (CheckInputScripts(tx, tx_state, view, (xflags ^ SCRIPT_VERIFY_DISCOURAGE_ANYPREVOUT), false, false, txsdata[i], m_chainman.m_validation_cache, nullptr)) {
+                    LogInfo("APO using transaction %s\n", tx.GetHash().ToString());
+                } else if (CheckInputScripts(tx, tx_state, view, flags, fCacheResults, fCacheResults, txsdata[i], m_chainman.m_validation_cache, nullptr)) {
+                    LogInfo("CTV/APO/CAT using transaction %s\n", tx.GetHash().ToString());
+                } else {
+                    // Any transaction validation failure in ConnectBlock is a block consensus failure
+                    state.Invalid(BlockValidationResult::BLOCK_CONSENSUS,
+                                  tx_state.GetRejectReason(), tx_state.GetDebugMessage());
+                    LogError("ConnectBlock(): CheckInputScripts on %s failed with %s\n",
+                             tx.GetHash().ToString(), state.ToString());
+                    return false;
+                }
             }
             control.Add(std::move(vChecks));
         }
```
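The retry order in the patch can be modelled in isolation. The sketch below uses made-up flag values and a callback standing in for `CheckInputScripts` (both hypothetical). It also makes one limitation easy to see: a tx that uses CAT alone, or several features at once, only ever reaches the combined "CTV/APO/CAT" message.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <string>

// Hypothetical stand-ins for the SCRIPT_VERIFY_DISCOURAGE_* flags (values made up).
enum : uint32_t {
    DISCOURAGE_CTV = 1u << 0,
    DISCOURAGE_APO = 1u << 1,
    DISCOURAGE_CAT = 1u << 2,
};

// Stand-in for CheckInputScripts: true if the tx validates under `flags`.
using Verifier = std::function<bool(uint32_t flags)>;

// Same order as the patch: everything discouraged, then CTV alone re-enabled
// (XOR clears the bit that xflags set), then APO alone, then the plain flags.
std::string ClassifyTx(const Verifier& verify, uint32_t flags) {
    const uint32_t xflags = flags | DISCOURAGE_CTV | DISCOURAGE_APO | DISCOURAGE_CAT;
    if (verify(xflags)) return "none";
    if (verify(xflags ^ DISCOURAGE_CTV)) return "CTV";
    if (verify(xflags ^ DISCOURAGE_APO)) return "APO";
    if (verify(flags)) return "CTV/APO/CAT";
    return "invalid";
}
```

An index built this way would probably want one retry per feature (including CAT) to tell combinations apart, at the cost of more script validations per tx.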