Keep in mind that miners have shares they mined themselves in the slice, and they know their indices: share-accounting-ext/extension.md at 281c1cbc4f9a07b21a443753a525197dc5d8e18c · demand-open-source/share-accounting-ext · GitHub

We can add the index to the Merkle root; I think that would be better.

A miner can solve this issue by asking the pool for a batch of consecutive shares that includes some produced by the miner itself. Since the miner knows the positions in the slice of the shares it produced, it becomes very difficult to perform the trick you described. BTW, I cannot see the incentive for doing so. I think the most likely scenario is when the pool is a miner and produces fake shares. Recall also that this issue affects standard PPLNS as well and has nothing to do with job declaration.
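A minimal Python sketch of the spot check described above, assuming the pool returns shares tagged with their index in the slice and the miner keeps its own shares keyed by that index. The `Share` record, its fields, and `check_own_shares` are hypothetical illustrations, not the extension's actual data structures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Share:
    # Hypothetical minimal record; the real Share struct in the spec has more fields.
    share_index: int  # position of the share in the slice
    payload: bytes    # stand-in for the rest of the share data


def check_own_shares(returned: list[Share], own: dict[int, Share]) -> bool:
    """Return True if `returned` is a gap-free run of consecutive indices that
    covers at least one of the miner's own shares, and every own share inside
    that run comes back unchanged."""
    if not returned:
        return False
    by_index = {s.share_index: s for s in returned}
    lo, hi = min(by_index), max(by_index)
    # Reject duplicates and gaps: the run must be exactly lo..hi.
    if len(by_index) != len(returned) or hi - lo + 1 != len(returned):
        return False
    covered = [i for i in own if lo <= i <= hi]
    if not covered:
        return False  # nothing the miner can cross-check in this batch
    return all(by_index[i] == own[i] for i in covered)


own = {3: Share(3, b"mine")}
batch = [Share(2, b"a"), Share(3, b"mine"), Share(4, b"b")]
print(check_own_shares(batch, own))  # True
```

A pool that swaps a share at a position the miner knows is caught immediately; the miner cannot check positions it did not mine, which is why the requested range should straddle its own shares.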

I think the most likely scenario is when the pool is a miner and produces fake shares.

Exactly this.

Recall also that this issue affects standard PPLNS as well and has nothing to do with job declaration.

You’re right: the issue I described is orthogonal to this proposal (which I like a lot!).

We can add the index to the Merkle root; I think that would be better.

You could use the share index to determine the Merkle tree ordering for the slice; this would prevent the scenario I outlined above. IIUC, this would also give the client some idea as to what the Merkle path should look like for a given share.
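A sketch of an index-ordered slice tree, assuming SHA-256 leaves that commit to `share_index` and Bitcoin-style duplication of the last node on odd levels. The leaf encoding and all function names are hypothetical; the point is that the bits of `share_index` fix the left/right direction at each level, so the client knows the shape of the path before checking it.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf_hash(share_index: int, share_bytes: bytes) -> bytes:
    # Hypothetical leaf encoding: commit to the index so a share cannot be moved.
    return h(share_index.to_bytes(4, "little") + share_bytes)


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """All tree levels, leaves first; leaf i sits at position i (its share_index)."""
    levels = [leaves]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2 == 1:
            cur = cur + [cur[-1]]  # duplicate the last node on odd levels
        levels.append([h(cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def merkle_path(levels: list[list[bytes]], index: int) -> list[bytes]:
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        # A missing right sibling means the node was paired with itself.
        path.append(level[sibling] if sibling < len(level) else level[index])
        index //= 2
    return path


def verify(root: bytes, share_index: int, share_bytes: bytes, path: list[bytes]) -> bool:
    node, idx = leaf_hash(share_index, share_bytes), share_index
    for sibling in path:
        # Bit 0 of idx tells whether the node is a left (0) or right (1) child.
        node = h(node + sibling) if idx % 2 == 0 else h(sibling + node)
        idx //= 2
    return node == root
```

With this layout a slice of n shares yields paths of ceil(log2 n) hashes, and since both the leaf content and the path directions depend on `share_index`, a share presented at the wrong position fails verification.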

this would also give the client some idea as to what the Merkle path should look like for a given share.

If the Merkle tree ordering is predetermined by the share's index in the slice, then you can omit that from the Share data struct.

The main issue is that you cannot cache the hashes: for each window you need to recalculate everything.

For the Merkle tree?

Yep, because the index depends on the window, so the slice root would differ depending on the window the slice belongs to. I need to look into it more, but it seems feasible.
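To illustrate the caching concern, here is a Python sketch assuming each leaf commits to the share's index relative to the start of the PPLNS window (a hypothetical encoding, as is `window_offset`): the same shares produce a different slice root once the window start moves, so hashes cached for one window cannot be reused for the next.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def slice_root(shares: list[bytes], window_offset: int) -> bytes:
    """Root of a slice whose leaves commit to each share's index in the window.
    window_offset: hypothetical index of the slice's first share in the window."""
    if not shares:
        raise ValueError("empty slice")
    nodes = [h((window_offset + i).to_bytes(8, "little") + s) for i, s in enumerate(shares)]
    while len(nodes) > 1:
        if len(nodes) % 2:
            nodes.append(nodes[-1])  # duplicate the last node on odd levels
        nodes = [h(nodes[i] + nodes[i + 1]) for i in range(0, len(nodes), 2)]
    return nodes[0]


shares = [b"s0", b"s1", b"s2"]
# Same shares, window shifted by 10 shares: every leaf changes, and so does the root.
print(slice_root(shares, 0) != slice_root(shares, 10))  # True
```

A leaf that commits only to the share's position inside its own slice would not depend on the window, which is one way to keep cached slice roots valid.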

@lorbax I have a question about the last formula of Section 3.3.

Can you demonstrate this?

\sum_{j=k_1}^{k_2} score_f(s_j) + ... + \sum_{j=k_{t-1}}^{k_t} score_f(s_j) = t

From an intuitive perspective it makes sense, but I’m having trouble visualizing this clearly in my mind.

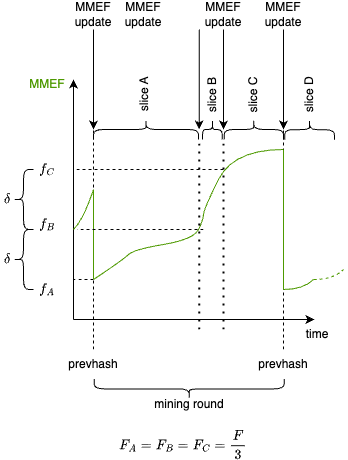

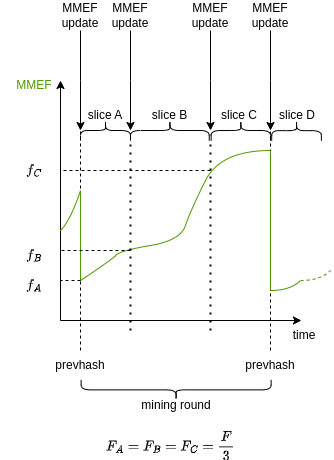

While I read the paper, I created some visuals to organize my thoughts.

If the information is accurate and the authors feel they are useful, they are free to use and modify them without attribution.

There is a typo. It should read 0=k_0<k_1<\dots<k_t=N, where N is the number of shares in the PPLNS window, and the m-th slice contains the shares s_{k_{m-1}+1}, \dots, s_{k_m}. Recall that, by Remark 5 in Section 3.2, if S is a slice, then \sum_{s \in S} score_f(s) = 1. Hence

\sum_{j=k_0+1}^{k_1} score_f(s_j) + \dots + \sum_{j=k_{t-1}+1}^{k_t} score_f(s_j) = \sum_{m=1}^{t} 1 = t.
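The argument can be checked numerically in Python. This sketch assumes a stand-in for score_f that divides each share's difficulty by the total difficulty of its slice; that is only one function satisfying Remark 5, not necessarily the paper's definition. `bounds` holds k_0, ..., k_t.

```python
from fractions import Fraction


def slice_scores(difficulties: list[int]) -> list[Fraction]:
    # Stand-in for score_f: normalize within the slice so the scores sum to 1,
    # mirroring Remark 5. Not necessarily the paper's score function.
    total = sum(difficulties)
    return [Fraction(d, total) for d in difficulties]


def window_score(difficulties: list[int], bounds: list[int]) -> Fraction:
    """bounds = [k_0, ..., k_t] with 0 = k_0 < k_1 < ... < k_t = N.
    Slice m holds s_{k_{m-1}+1}, ..., s_{k_m} (1-based), i.e. the 0-based
    Python slice difficulties[k_{m-1}:k_m]."""
    assert bounds[0] == 0 and bounds[-1] == len(difficulties)
    assert all(a < b for a, b in zip(bounds, bounds[1:]))
    total = Fraction(0)
    for m in range(1, len(bounds)):
        total += sum(slice_scores(difficulties[bounds[m - 1]:bounds[m]]))
    return total


# N = 7 shares split into t = 3 slices; the total score equals t.
print(window_score([1, 2, 3, 4, 5, 6, 7], [0, 2, 5, 7]))  # 3
```

Exact `Fraction` arithmetic is used so the per-slice sums come out as exactly 1 rather than a floating-point approximation.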

I slightly changed the share definition: share-accounting-ext/extension.md at 8cf48767de3a477ab2b11ada8fb3f05c19cce758 · demand-open-source/share-accounting-ext · GitHub

- Remove merkle_path from the share: the field is redundant because we can derive it from the transactions in the job later, during the verification procedure.

- Add share_index: the index of the share in the slice. This solves the issue raised above by @marathon-gary (there is no way for a miner to check whether the shares sent by the pool are the ones it requested).
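For the first item, a Python sketch of how the coinbase Merkle path can be recomputed from the job's transactions, assuming the miner has the txids of the non-coinbase transactions in internal byte order. It follows Bitcoin's block Merkle tree rules (double SHA-256, last node duplicated on odd levels); the function names are hypothetical.

```python
import hashlib


def dsha256(data: bytes) -> bytes:
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def coinbase_merkle_path(txids: list[bytes]) -> list[bytes]:
    """Siblings of the coinbase (position 0), from leaf to root.
    txids: the job's non-coinbase txids in internal byte order."""
    path = []
    level = [b""] + list(txids)  # b"" marks the coinbase slot; it is never hashed
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # Bitcoin duplicates the last node
        path.append(level[1])
        # Hash every pair except the first one, which holds the coinbase slot.
        level = [b""] + [dsha256(level[i] + level[i + 1]) for i in range(2, len(level), 2)]
    return path


def root_from_path(coinbase_txid: bytes, path: list[bytes]) -> bytes:
    node = coinbase_txid
    for sibling in path:
        node = dsha256(node + sibling)  # the coinbase branch is always the left child
    return node


def merkle_root(txids: list[bytes]) -> bytes:
    level = list(txids)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [dsha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


cb = dsha256(b"coinbase")
txs = [dsha256(bytes([i])) for i in range(5)]
print(root_from_path(cb, coinbase_merkle_path(txs)) == merkle_root([cb] + txs))  # True
```

Because the coinbase is always the leftmost leaf, the path never depends on the coinbase itself, which is what makes storing it in every share redundant.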

Is there a minimum threshold for Merkle inclusion that a miner would want to challenge the pool for, mathematically or statistically speaking?

Is the delta for determining a new slice dynamic or static? I suppose that’s left up to the pool or the implementer of the payout scheme, but I am curious about it.

@plebhash I think I was wrong in our discussion about this.

The pool decides it. There is no way to communicate it in the protocol, so it is something static that the pool and the miners have to agree on beforehand.

Not sure; I guess that depends on the number of miners that use the pool.
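One way to frame the threshold question, assuming the miner audits by drawing shares uniformly at random without replacement from a window of N shares of which F are fake: the probability of catching at least one fake is 1 - C(N-F, k)/C(N, k). The Python helpers below are hypothetical and not part of the protocol.

```python
from math import comb
from typing import Optional


def detection_probability(window_size: int, fake_shares: int, sampled: int) -> float:
    """P(at least one fake among `sampled` shares drawn uniformly without
    replacement from `window_size` shares, `fake_shares` of which are fake)."""
    return 1 - comb(window_size - fake_shares, sampled) / comb(window_size, sampled)


def min_samples(window_size: int, fake_shares: int, target: float) -> Optional[int]:
    """Smallest sample size whose detection probability reaches `target`."""
    for k in range(window_size + 1):
        if detection_probability(window_size, fake_shares, k) >= target:
            return k
    return None  # target unreachable, e.g. when fake_shares == 0


print(min_samples(10, 1, 0.5))  # 5
```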

Hi! Just a note that a new version of the article is available. I would like to thank @plebhash and @marathon-gary, who reviewed the article and made many useful suggestions and corrections.

Update the extension spec: add the PHash data type, which the miner needs to verify each share’s work.

The PHash message includes the previous hash and the starting index of the slices that use it. This message is sent within the GetWindowSuccess response, enabling the miner to identify which slices correspond to which previous hash, and thereby determine the previous hash for each share. This information is essential for verifying the work of each share.
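A Python sketch of the lookup the miner could perform, assuming the PHash entries arrive sorted by starting slice index and that each previous hash applies until the next entry starts. The field names `prev_hash` and `start_index` and the helper `prev_hash_for_slice` are hypothetical stand-ins for whatever the spec defines.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class PHash:
    # Hypothetical field names: the previous block hash and the index of the
    # first slice built on top of it.
    prev_hash: bytes
    start_index: int


def prev_hash_for_slice(phashes: list[PHash], slice_index: int) -> bytes:
    """Assumes `phashes` is sorted by start_index and each entry applies until
    the next one starts."""
    starts = [p.start_index for p in phashes]
    pos = bisect_right(starts, slice_index) - 1  # last entry with start <= slice_index
    if pos < 0:
        raise ValueError("slice precedes the first PHash entry")
    return phashes[pos].prev_hash


phashes = [PHash(b"A", 0), PHash(b"B", 3), PHash(b"C", 7)]
print(prev_hash_for_slice(phashes, 5))  # b'B'
```

Binary search keeps the lookup logarithmic in the number of PHash entries, which matters little in practice since a window rarely spans many blocks.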

I made some adjustments to this graph.

The \delta steps are now explicitly marked on the MMEF axis.