Hi everyone. I recently wrote an analysis about the security of skipping assumed-valid witness downloads for pruned nodes. This possibility was first mentioned in Segregated Witness Benefits, but at the time assume-valid didn’t exist. Then, two years ago, after this BSE question, a PR was opened in Bitcoin Core to gather feedback on this, but there were some concerns about this being a security reduction.

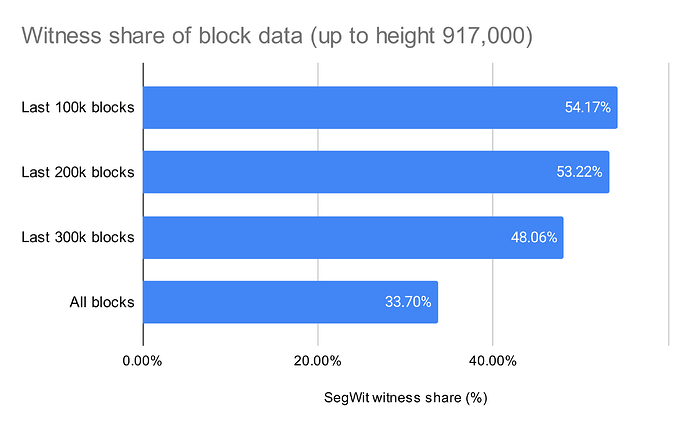

This witnessless sync for pruned nodes would reduce bandwidth usage by more than 40%, which translates to hundreds of GBs saved during IBD. These network savings compound nicely with assume-valid, as the bottleneck is less likely to be the CPU, and now also the bandwidth. As shown in the PR, implementing this was relatively straightforward.

Below I will summarize what I found, but you can read the full writeup here: Witnessless Sync: Why Pruned Nodes Can Skip Witness Downloads for Assume-Valid Blocks · GitHub

The main concern about Witnessless Sync was that we don’t check the witness data availability before syncing (as we skip downloading it, for assume-valid blocks), but I argue it is already implicitly checked by assume-valid:

- If you use

assume-validyou trust that the scripts are valid. - In order for the scripts to be valid, the witnesses must have been available. Missing witness data means script evaluation fails, which we assume not to be the case because of 1.

- Hence, you do know the witnesses were available at some point, because it is a premise of

assume-valid.

Then, using this fact, you can see how Witnessless Sync follows the same data-availability model as a regular pruned node. Pruned nodes only need a one-time data availability check, performed during IBD. After that, they aren’t required to download the same blocks after x months/years to verify the data is still available.

Since our Witnessless Sync node already has this one-time past availability check covered by assume-valid, downloading witnesses is actually checking availability twice. AFAIK this is not required for pruned nodes, even if the data availability check (IBD) was performed many years ago.

This is why I believe this change is as safe as a long-running pruned node (you know data was available at some point in the past, and that’s enough). I’d love to hear any thoughts or criticisms you might have!