I recently looked at compact block reconstruction statistics from the debug.log files of my monitoring nodes. In particular, I looked at how many blocks require an extra getblocktxn → blocktxn round-trip because the node is missing some of the block’s transactions during reconstruction. I thought I’d share my data and a few observations here.

My nodes have debug=cmpctblock logging enabled. This allows me to grep for compact block reconstructions. The log output contains information on the number of transactions pre-filled in the compact block (here 1, the coinbase transaction), how many transactions the node could use from its mempool (3986), how many transactions were used from the extra pool (9) and how many were requested (6).

[cmpctblock] Successfully reconstructed block 000000000019d6689c085ae165831e934ff763ae46a2a6c172b3f1b60a8ce26f with 1 txn prefilled, 3986 txn from mempool (incl at least 9 from extra pool) and 6 txn requested
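For anyone who wants to repeat this on their own logs, here is a minimal Python sketch of how such a line could be parsed. The regular expression is derived only from the example line above, so it is an assumption that other Bitcoin Core versions word the message identically; `parse_reconstruction` is a hypothetical helper name.

```python
import re

# Pattern modelled on the example log line quoted above (assumption: the
# wording is the same in the Bitcoin Core version that produced your log).
RECONSTRUCTED = re.compile(
    r"Successfully reconstructed block (?P<hash>[0-9a-f]+) "
    r"with (?P<prefilled>\d+) txn prefilled, "
    r"(?P<mempool>\d+) txn from mempool "
    r"\(incl at least (?P<extra>\d+) from extra pool\) "
    r"and (?P<requested>\d+) txn requested"
)


def parse_reconstruction(line):
    """Return the counts from one reconstruction log line, or None if no match."""
    match = RECONSTRUCTED.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    return {
        "hash": fields["hash"],
        "prefilled": int(fields["prefilled"]),
        "mempool": int(fields["mempool"]),
        "extra": int(fields["extra"]),
        "requested": int(fields["requested"]),
    }


example = (
    "[cmpctblock] Successfully reconstructed block "
    "000000000019d6689c085ae165831e934ff763ae46a2a6c172b3f1b60a8ce26f "
    "with 1 txn prefilled, 3986 txn from mempool "
    "(incl at least 9 from extra pool) and 6 txn requested"
)
print(parse_reconstruction(example))
```

A reconstruction needed the extra round-trip whenever `requested` is greater than zero.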

While the monitoring nodes have slight differences in their node configuration, they mostly use Bitcoin Core defaults. All monitoring nodes accept inbound connections and the default inbound slots are usually full. I set maxconnections=1000 on node dave - it usually has about 400+ inbound connections. On 2024-05-08, I switched node frank to blocksonly=1, which means it won’t do block reconstructions anymore. I switched erin to mempoolfullrbf=1 on 2024-07-26.

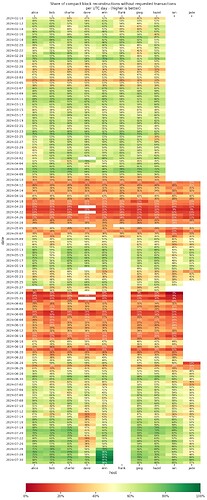

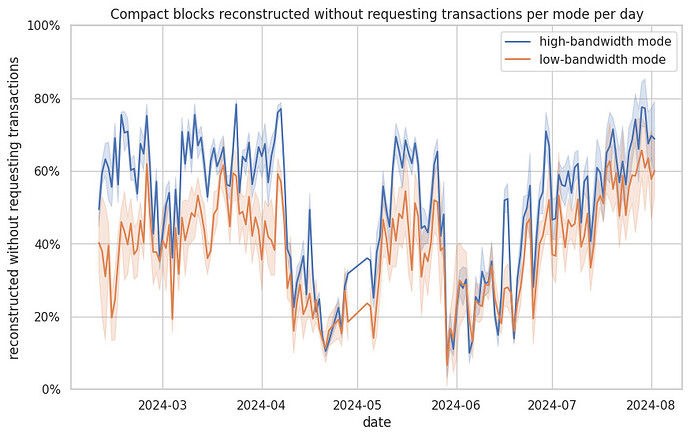

The following shows the share of block reconstructions that didn’t need to request transactions - higher is better.

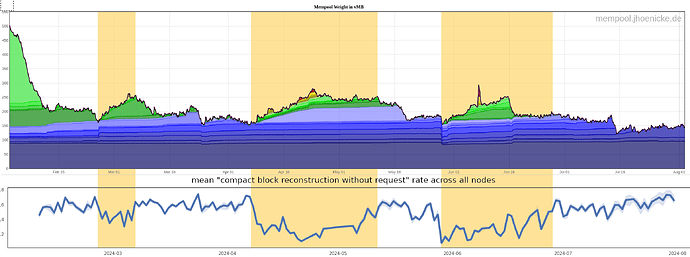
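As a rough sketch of how this share can be computed: group reconstructions by day and divide the number with zero requested transactions by the total. The function name `share_without_request` and the sample records are made up for illustration; they are not my measured data.

```python
from collections import defaultdict


def share_without_request(records):
    """records: iterable of (day, requested_txn_count) pairs.

    Returns {day: share of reconstructions that requested no transactions}.
    """
    totals = defaultdict(int)
    without_request = defaultdict(int)
    for day, requested in records:
        totals[day] += 1
        if requested == 0:
            without_request[day] += 1
    return {day: without_request[day] / totals[day] for day in totals}


# Made-up sample values for illustration only.
sample = [
    ("2024-05-01", 0),
    ("2024-05-01", 6),
    ("2024-05-01", 0),
    ("2024-05-01", 0),
    ("2024-05-02", 3),
    ("2024-05-02", 0),
]
shares = share_without_request(sample)
for day, share in sorted(shares.items()):
    print(day, f"{share:.0%}")
```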

I observed three periods with a 50% or less “compact block reconstruction without transaction request” rate across all nodes:

- End of February till early March

- Mid April till early May - especially bad around the halving.

- End of May till mid June - especially bad around end of May

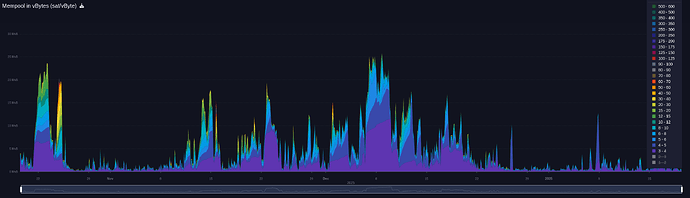

This seems to correlate with increasing mempool activity.

Node dave underperforming between 2024-07-10 and 2024-07-27 correlates with its inbound connections rising from about 320 to nearly 500. It was restarted on 2024-07-27, which reset the inbound connections.

During other periods where dave had a similar number of inbound connections, this didn’t seem to have an impact on block reconstruction.

As noted in my review on policy: enable full-rbf by default, enabling mempoolfullrbf=1 on node erin significantly reduced the number of compact block reconstructions where the node had to request transactions. This seems to indicate that most pools now run a policy that differs from the Bitcoin Core default. Turning on mempoolfullrbf by default could improve block reconstruction and thus reduce block propagation time across the network.

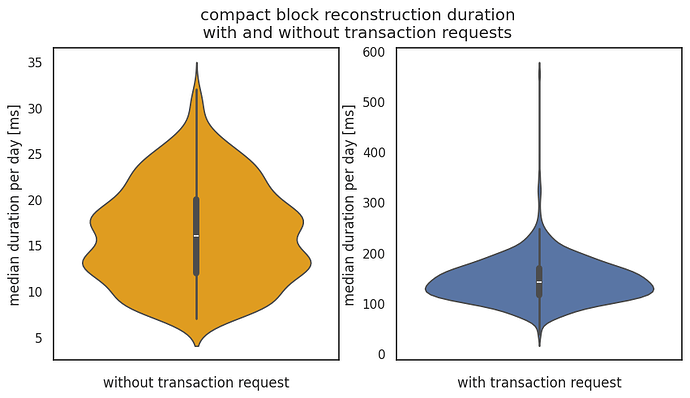

block reconstruction duration

I was curious how long it takes to reconstruct a block when we have to request transactions and when we don’t have to. Note that these nodes are located in well-connected data centers with reasonably good hardware, YMMV.

I looked at the timings of block reconstructions in high-bandwidth mode only, because in low-bandwidth mode Bitcoin Core does not log the new cmpctblock header message. I took the new cmpctblock header log timestamp (with logtimemicros=1) as the start time and the Successfully reconstructed block timestamp as the end time.
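A sketch of that measurement in Python, under a few assumptions: every log line starts with an ISO 8601 UTC timestamp with microseconds, both messages contain the 64-character block hash, and matching on the substrings "new cmpctblock header" and "Successfully reconstructed block" is enough to find them. The sample lines are hand-written for illustration and not copied from a real debug.log; `reconstruction_durations` is a hypothetical helper name.

```python
import re
from datetime import datetime, timedelta
from statistics import median

# Assumption: block hashes appear as 64 lowercase hex characters in both lines.
BLOCK_HASH = re.compile(r"\b[0-9a-f]{64}\b")


def parse_time(line):
    # Assumption: lines start with e.g. 2024-05-08T12:00:00.123456Z
    return datetime.strptime(line.split(" ", 1)[0], "%Y-%m-%dT%H:%M:%S.%fZ")


def reconstruction_durations(lines):
    """Return {block_hash: milliseconds from header log to reconstruction log}."""
    started = {}
    durations = {}
    for line in lines:
        match = BLOCK_HASH.search(line)
        if match is None:
            continue
        block = match.group(0)
        if "new cmpctblock header" in line:
            # Keep the first announcement only.
            started.setdefault(block, parse_time(line))
        elif "Successfully reconstructed block" in line and block in started:
            delta = parse_time(line) - started[block]
            durations[block] = delta / timedelta(milliseconds=1)
    return durations


# Hand-written sample lines (illustrative format, not real log output).
h1, h2 = "00" * 32, "11" * 32
sample = [
    f"2024-05-08T12:00:00.100000Z new cmpctblock header hash={h1}",
    f"2024-05-08T12:00:00.115000Z Successfully reconstructed block {h1} with 1 txn prefilled",
    f"2024-05-08T12:10:00.000000Z new cmpctblock header hash={h2}",
    f"2024-05-08T12:10:00.130000Z Successfully reconstructed block {h2} with 1 txn prefilled",
]
durations = reconstruction_durations(sample)
print(durations, median(durations.values()))
```

Splitting the resulting durations by whether transactions were requested gives the two groups compared here.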

On my hardware, the median block reconstruction time is usually around 15ms when no transactions need to be requested, and about 130ms when transactions do need to be requested.

note the different y-axis!further questions

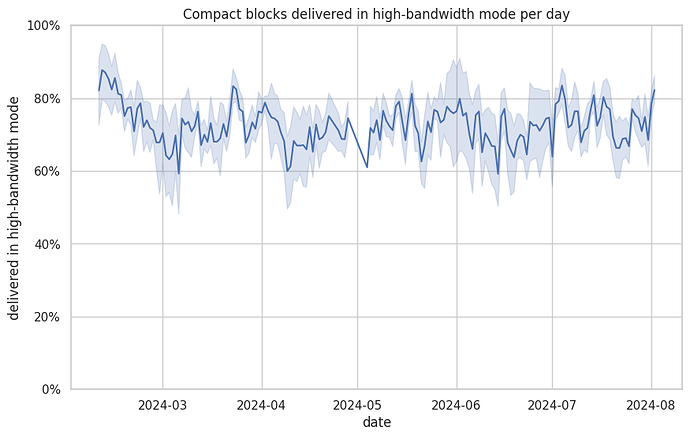

I had the impression that low-bandwidth block reconstructions more often needed to request extra transactions compared to high-bandwidth reconstructions. I’m not sure if that would be expected. I think I have the data to look into it. Additionally, the share of low- vs high-bandwidth reconstructions over time would be interesting.

How do different extra pool sizes affect block reconstruction? How was the block reconstruction performance before miners started to enable full-rbf? Does anyone have historic debug=cmpctblock debug.logs for analysis? Also, all my (current) nodes have inbound connections. Looking at an outbound-only node in comparison could also be interesting.